Cross-validated Predictive Ability Test (CVPAT)

Abstract

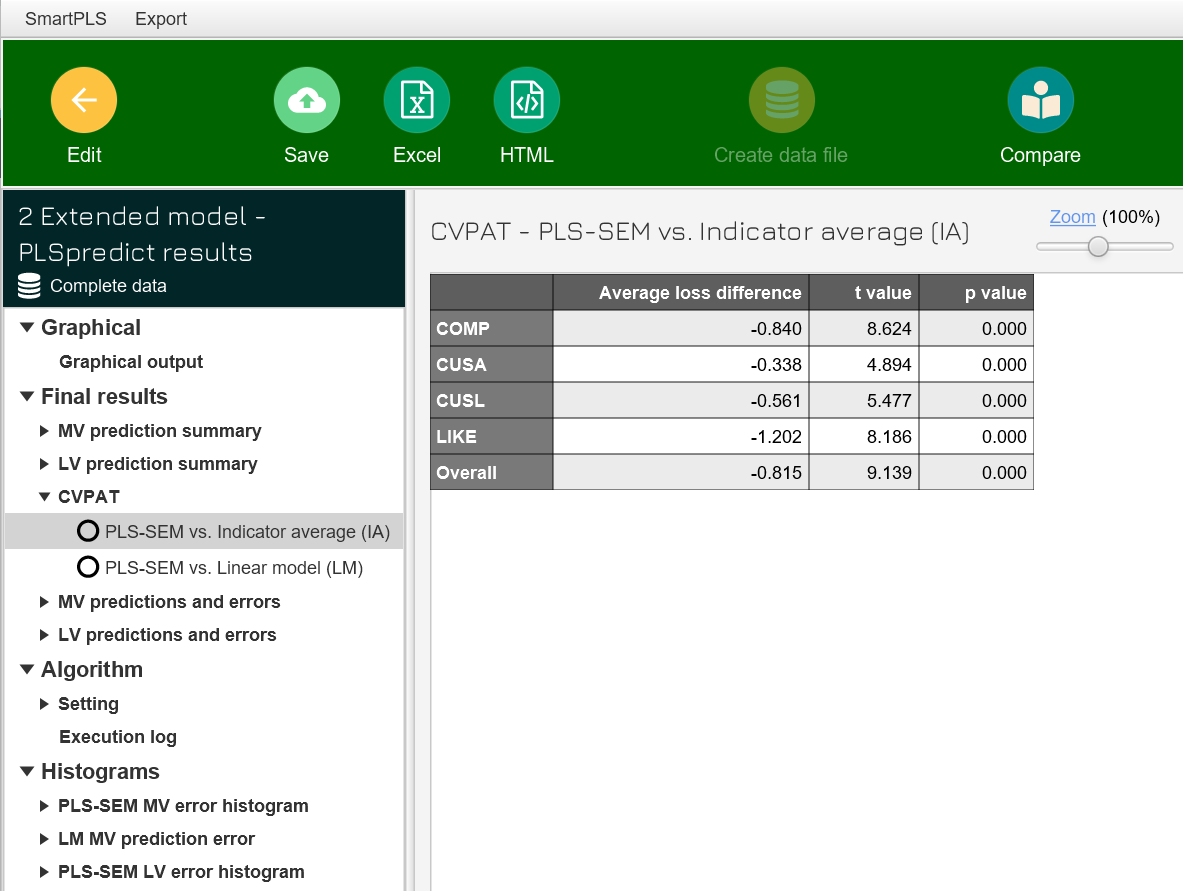

Researchers can use the cross-validated predictive ability test (CVPAT) to evaluate and substantiate the predictive capabilities of their model. In SmartPLS, the CVPAT results are available via the PLSpredict results report.

Description

The cross-validated predictive ability test (CVPAT) represents an alternative to PLSpredict for prediction-oriented assessment of PLS-SEM results. Liengaard et al. (2021) developed the CVPAT for prediction-oriented model comparison in PLS-SEM, and Sharma et al. (2023) extended it to evaluate a model's predictive capabilities. CVPAT applies an out-of-sample prediction approach to calculate the model's prediction error, which determines the average loss value. For prediction-based model assessment, this average loss value is compared with two benchmarks: the average loss of a prediction using indicator averages (IA) as a naive benchmark, and the average loss of a linear model (LM) forecast as a more conservative benchmark. PLS-SEM's average loss should be lower than the average loss of each benchmark, which is expressed by a negative difference in the average loss values. CVPAT tests whether PLS-SEM's average loss is significantly lower than the average loss of the benchmarks. Therefore, the difference in the average loss values should be significantly below zero to substantiate that the model has better predictive capabilities than the prediction benchmarks.
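The comparison described above can be sketched in a few lines of Python. This is a minimal illustration and not the SmartPLS implementation: it assumes squared-error loss, a single target indicator, and a normal approximation for the one-sided p-value. The function name `loss_difference_test` and the example error values are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def loss_difference_test(pls_errors, benchmark_errors):
    """Illustrative one-sided test on paired squared-error loss differences."""
    if len(pls_errors) != len(benchmark_errors):
        raise ValueError("Both error lists must cover the same observations.")
    # Per-observation loss difference: squared PLS error minus squared benchmark error.
    diffs = [p ** 2 - b ** 2 for p, b in zip(pls_errors, benchmark_errors)]
    n = len(diffs)
    avg_diff = mean(diffs)  # negative when PLS has the lower average loss
    std_err = stdev(diffs) / math.sqrt(n)
    t_stat = avg_diff / std_err
    # Lower-tail p-value via a normal approximation (assumption made for this sketch).
    p_value = NormalDist().cdf(t_stat)
    return avg_diff, t_stat, p_value


# Hypothetical out-of-sample prediction errors for four observations.
pls_errors = [0.1, -0.2, 0.3, 0.1]
benchmark_errors = [0.5, -0.6, 0.7, 0.4]
avg_diff, t_stat, p_value = loss_difference_test(pls_errors, benchmark_errors)
print(avg_diff, t_stat, p_value)
```

A negative `avg_diff` together with a small `p_value` corresponds to the case in which the model's average loss is significantly below that of the benchmark.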

In SmartPLS, the CVPAT results are available via the PLSpredict results report. The IA and LM benchmark results are comparable to the Q² and LM values obtained by PLSpredict (Shmueli et al., 2016, 2019). However, while PLSpredict uses the indicators of the early antecedent constructs to compute the results, CVPAT uses the indicators of the direct antecedents (for further details on early and direct antecedents, see Danks, 2021).

The out-of-sample predictions used in CVPAT assist researchers in evaluating the predictive capabilities of their model. Therefore, CVPAT should be included in the evaluation of PLS-SEM results (Hair et al., 2022).

Additional procedures and extensions are under development and may become part of future SmartPLS versions (e.g., the CVPAT-based model comparison).

PLSpredict Settings for obtaining CVPAT results in SmartPLS

Number of Folds

Default: 10

In k-fold cross-validation, the algorithm splits the full dataset into k equally sized subsets of data (folds). It then predicts each fold (the hold-out sample) using the remaining k-1 subsets, which together form the training sample. For example, when k equals 10 (i.e., 10 folds), a dataset of 200 observations is split into 10 subsets of 20 observations each. The algorithm then makes ten predictions, one for each fold, each time training on the nine remaining subsets.
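The splitting step can be illustrated with a short Python sketch. It is not SmartPLS code; the function name `k_fold_indices` and the `seed` parameter are assumptions made for this example.

```python
import random


def k_fold_indices(n_observations, k, seed=None):
    """Return k (training, holdout) pairs of observation indices."""
    rng = random.Random(seed)
    indices = list(range(n_observations))
    rng.shuffle(indices)  # random assignment of observations to folds
    # Fold j takes every k-th shuffled index, so fold sizes differ by at most one.
    folds = [indices[j::k] for j in range(k)]
    splits = []
    for j, holdout in enumerate(folds):
        # The training sample combines all folds except the current hold-out fold.
        training = [i for f, fold in enumerate(folds) if f != j for i in fold]
        splits.append((training, holdout))
    return splits


# 200 observations and k = 10, as in the example above.
splits = k_fold_indices(200, 10, seed=1)
for training, holdout in splits:
    print(len(training), len(holdout))
```

Each of the ten pairs holds 20 hold-out observations and 180 training observations, and every observation appears in exactly one hold-out fold.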

Number of Repetitions

Default: 10

The number of repetitions indicates how often the PLSpredict algorithm runs the k-fold cross-validation on random splits of the full dataset into k folds.

Traditionally, cross-validation uses only one random split into k folds. However, a single random split can make the predictions strongly dependent on this random assignment of observations to the k folds. Because the data are partitioned randomly, runs of the algorithm at different points in time may yield different predictive performance results.

Repeating the k-fold cross-validation with different random data partitions and averaging the results across the repetitions yields a more stable estimate of the predictive performance of the PLS path model.
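The effect of repetitions can be sketched as follows. This example is only an illustration under stated assumptions: it replaces the PLS path model with a simple stand-in predictor (the mean of the training sample), uses mean squared error as the performance measure, and runs on hypothetical data. The function name `repeated_k_fold_mse` is not part of SmartPLS.

```python
import random
from statistics import mean


def repeated_k_fold_mse(values, k=10, repetitions=10, seed=0):
    """Average out-of-sample MSE over repeated random k-fold partitions."""
    rng = random.Random(seed)
    n = len(values)
    per_repetition = []
    for _ in range(repetitions):
        indices = list(range(n))
        rng.shuffle(indices)  # a new random partition for each repetition
        folds = [indices[j::k] for j in range(k)]
        squared_errors = []
        for j, holdout in enumerate(folds):
            training = [values[i] for f, fold in enumerate(folds) if f != j for i in fold]
            prediction = mean(training)  # stand-in predictor, not a PLS model
            squared_errors.extend((values[i] - prediction) ** 2 for i in holdout)
        per_repetition.append(mean(squared_errors))
    # The average across repetitions is less sensitive to any single partition.
    return mean(per_repetition), per_repetition


# Hypothetical data: 200 observations.
values = [float(i % 7) for i in range(200)]
average_mse, repetition_mses = repeated_k_fold_mse(values)
print(average_mse, min(repetition_mses), max(repetition_mses))
```

The per-repetition values differ slightly because each repetition uses a different random partition; their average is the reported estimate.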

References

- Danks, N. (2021). The Piggy in the Middle: The Role of Mediators in PLS-SEM-based Prediction. ACM SIGMIS Database: the DATABASE for Advances in Information Systems, 52, 24-42.

- Hair, J. F., Hult, G. T. M., Ringle, C. M., & Sarstedt, M. (2022). A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM) (3rd ed.). Thousand Oaks, CA: Sage.

- Liengaard, B. D., Sharma, P. N., Hult, G. T. M., Jensen, M. B., Sarstedt, M., Hair, J. F., & Ringle, C. M. (2021). Prediction: Coveted, Yet Forsaken? Introducing a Cross-validated Predictive Ability Test in Partial Least Squares Path Modeling. Decision Sciences, 52(2), 362-392.

- Sharma, P. N., Liengaard, B. D., Hair, J. F., Sarstedt, M., & Ringle, C. M. (2023). Predictive Model Assessment and Selection in Composite-based Modeling Using PLS-SEM: Extensions and Guidelines for Using CVPAT. European Journal of Marketing, 57(6), 1662-1677.

- Shmueli, G., Ray, S., Estrada, J. M. V., & Chatla, S. B. (2016). The Elephant in the Room: Predictive Performance of PLS Models. Journal of Business Research, 69(10), 4552-4564.

- Shmueli, G., Sarstedt, M., Hair, J. F., Cheah, J. H., Ting, H., Vaithilingam, S., & Ringle, C. M. (2019). Predictive Model Assessment in PLS-SEM: Guidelines for Using PLSpredict. European Journal of Marketing, 53(11), 2322-2347.


Cite correctly

Please always cite the use of SmartPLS!

Ringle, Christian M., Wende, Sven, & Becker, Jan-Michael. (2024). SmartPLS 4. Bönningstedt: SmartPLS. Retrieved from https://www.smartpls.com